- #TALEND REDSHIFT UNLOAD EXAMPLE HOW TO#

- #TALEND REDSHIFT UNLOAD EXAMPLE PDF#

- #TALEND REDSHIFT UNLOAD EXAMPLE INSTALL#

Example 3: Upload files into S3 with Boto3. The credentials you configure determine the environment the file is uploaded to. You need to have the AWS CLI configured to make this code work. The unload statement ends with the options `MANIFEST GZIP ALLOWOVERWRITE`, followed by a `Commit`, and is filled in with `% (schema, table, s3_bucket_name, schema, table, aws_access_key_id, \`. The connection string is completed with `.format(dbname, port, user, password, host_url)`.
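As a rough illustration of the Boto3 upload described above, here is a minimal sketch. The helper name `upload_file_to_s3` and its parameters are hypothetical and not taken from the original script. It assumes credentials have already been set up (for example with the AWS CLI), which is where Boto3 looks by default.

```python
import os


def upload_file_to_s3(local_path, bucket, key=None, s3_client=None):
    """Upload one local file to S3 and return the object key that was used.

    Hypothetical helper for illustration; not part of the original script.
    """
    if s3_client is None:
        # Imported lazily so the helper can also be exercised with a stub client.
        import boto3

        # boto3 resolves credentials from the environment or the AWS CLI config.
        s3_client = boto3.client("s3")
    if key is None:
        # Default the object key to the file name without its directory.
        key = os.path.basename(local_path)
    # upload_file(Filename, Bucket, Key) is the standard S3 client call.
    s3_client.upload_file(local_path, bucket, key)
    return key
```

Passing the client in as an argument is a design choice that keeps the function easy to test without touching a real bucket. In normal use you would call it with just a path and a bucket name.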

The unload method carries the docstring `'''This method will unload redshift table into S3'''` and begins its connection string with `conn_string = "dbname=''"\`.
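The fragments above suggest a connection string built from `dbname`, `port`, `user`, `password` and `host_url`, plus an UNLOAD statement using `MANIFEST GZIP ALLOWOVERWRITE`. The sketch below shows one way these pieces might fit together. The function names, the `SELECT *` query, the S3 path layout, and the `aws_secret_access_key` parameter are assumptions, since the original script did not survive intact.

```python
def build_conn_string(dbname, port, user, password, host_url):
    """Build a libpq-style connection string (assumed layout)."""
    return "dbname='{}' port='{}' user='{}' password='{}' host='{}'".format(
        dbname, port, user, password, host_url
    )


def build_unload_sql(schema, table, s3_bucket_name,
                     aws_access_key_id, aws_secret_access_key):
    """Build an UNLOAD statement; the s3://bucket/schema/table/ prefix is an assumption.

    Values are interpolated directly, so they must come from trusted input.
    """
    return (
        "UNLOAD ('SELECT * FROM {0}.{1}') "
        "TO 's3://{2}/{0}/{1}/' "
        "CREDENTIALS 'aws_access_key_id={3};aws_secret_access_key={4}' "
        "MANIFEST GZIP ALLOWOVERWRITE;"
    ).format(schema, table, s3_bucket_name,
             aws_access_key_id, aws_secret_access_key)


def unload_table(conn_string, unload_sql):
    """Run the UNLOAD statement against Redshift and commit."""
    # Imported lazily so the string builders work without psycopg2 installed.
    import psycopg2

    conn = psycopg2.connect(conn_string)
    try:
        with conn.cursor() as cur:
            cur.execute(unload_sql)
        conn.commit()
    finally:
        conn.close()
```

Keeping the string builders separate from the database call makes it possible to inspect the generated SQL before running it against a cluster.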

#TALEND REDSHIFT UNLOAD EXAMPLE INSTALL#

You need to install boto3 and psycopg2 (which enables you to connect to Redshift). The code examples are all written in Python 2.7, but they all work with 3.x, too. If you want to deploy a Python script on an EC2 instance or on EMR through Data Pipeline to leverage their serverless architecture, it is faster and easier to run code in 2.7.

In Talend, in the Access Key field, press Ctrl + Space and select context.s3accesskey from the list to fill it in. In this example, the file is E:/Redshift/redshiftbulk.txt. The Talend Component guide, available in the Talend Help Center, provides a complete description of the use and configuration of each Amazon Redshift component. It contains many useful tips and describes the features of each component.

#TALEND REDSHIFT UNLOAD EXAMPLE HOW TO#

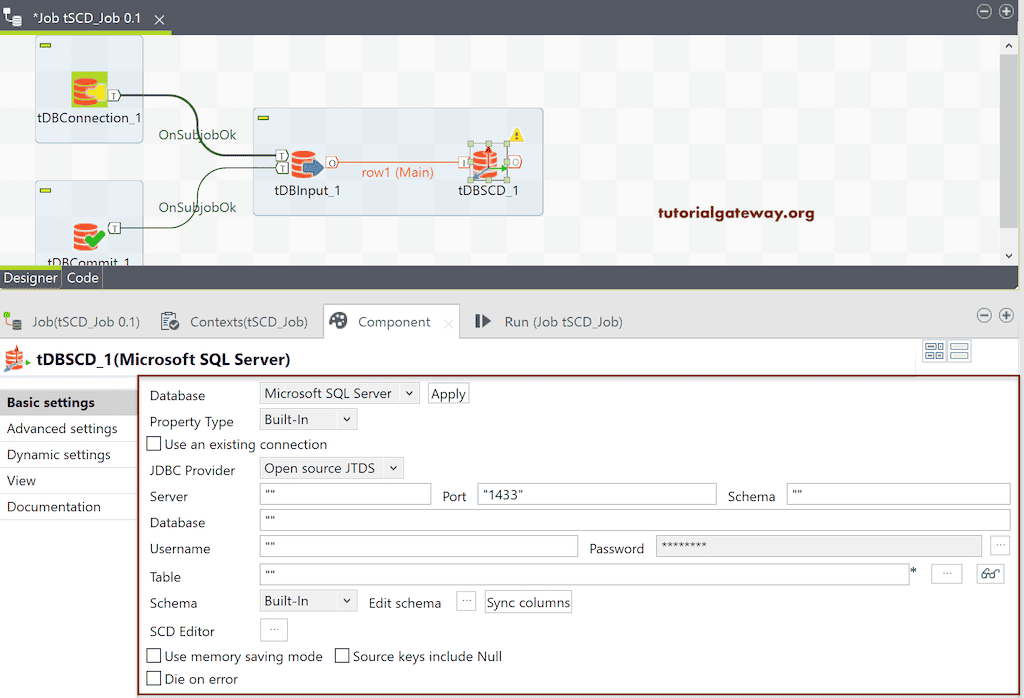

This article uses the CData JDBC Driver for Redshift to transfer Redshift data in a job flow in Talend. It shows how you can easily integrate the CData JDBC driver for Redshift into your workflow in Talend, and how to integrate Redshift data with standard components and data source configuration wizards in Talend Open Studio.

I usually encourage people to use Python 3. When it comes to AWS, however, I highly recommend using Python 2.7. Boto3 (the AWS SDK for Python) enables you to upload files into S3 from a server or local computer. There is also a Python library to assist with ETL processing for Amazon Redshift (COPY, UNLOAD).

You can upload data into Redshift from both flat files and JSON files. The COPY command examples demonstrate loading from different file formats, using several COPY command options, and troubleshooting load errors. You can also unload data from Redshift to S3 by calling an UNLOAD command. The code for Snowflake Unload to S3 using the stage is given below.

The best practice is to use Parquet files in ML and big data projects. This article from AWS provides detailed explanations and a simple policy example: How to Prevent Uploads of Unencrypted Objects to Amazon S3.

#TALEND REDSHIFT UNLOAD EXAMPLE PDF#

The best way to load data to Redshift is to go via S3 by calling a COPY command because of its ease and speed. In the AWS documentation for Amazon Redshift (Step 5: Run the COPY commands), you run COPY commands to load each of the tables in the SSB schema.
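To make the COPY route more concrete, here is a small sketch that builds a COPY statement for a flat (CSV) file or a JSON file already sitting in S3. The function name `build_copy_sql`, its parameters, and the use of `IAM_ROLE` for authorization are assumptions made for illustration. The text above does not say which authorization method or options the author used.

```python
def build_copy_sql(schema, table, s3_path, iam_role, file_format="csv"):
    """Build a Redshift COPY statement for a CSV or JSON source in S3.

    Hypothetical helper; values are interpolated directly and must be trusted.
    """
    if file_format == "csv":
        format_clause = "FORMAT AS CSV"
    elif file_format == "json":
        # 'auto' asks Redshift to match JSON keys to column names.
        format_clause = "FORMAT AS JSON 'auto'"
    else:
        raise ValueError("unsupported file_format: {}".format(file_format))
    return "COPY {}.{} FROM '{}' IAM_ROLE '{}' {};".format(
        schema, table, s3_path, iam_role, format_clause
    )
```

The resulting string can be executed through a psycopg2 cursor in the same way as any other SQL statement, followed by a commit.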

Python and AWS SDK make it easy for us to move data in the ecosystem. AWS offers a nice solution to data warehousing with their columnar database, Redshift, and an object storage, S3.
